Most things in web development are difficult.

I started this post two weeks ago as a simple “How to use SSR to boost performance” article. After hours of profiling and consulting people smarter than me, I know one thing: server-side rendering (SSR) is more nuanced than you would like.

You’ve probably been told that server-side rendering your JavaScript framework site will boost performance. But is that the truth? This article is going to test this assumption. Spoiler alert: the answer is maybe.

This is not a “beware SSR” article. SSR can still help with performance in certain scenarios. You just need to remember why you want to use SSR in the first place.

JavaScript handcuffs the content of a single-page app

The default setup for a single-page app is an “app” element with some scripts. The “app” element inflates into meaningful content once the scripts run.

```html
<html>
  <head>
    <title>My non ssr app</title>
  </head>
  <body>
    <!-- "my-app" is a white page until these big scripts load -->
    <my-app></my-app>
    <script src="big-script.876aa678as463sf7.js"></script>
    <script src="other-big-script.876aa678as463sf7.js"></script>
  </body>
</html>
```

This is not an optimal loading pattern. The browser is able to render a page with just HTML and CSS. We could have content on the screen before the browser ever touches a script.

The point of SSR is a faster render of meaningful content

The goal of SSR: offset the cost of tying up meaningful content in JavaScript. If JavaScript ties up the content, you can expect to stare at a white screen longer than desired. What if you could have your cake and eat it too?

What if you sent down a static HTML version of the app while JavaScript loads? Once the JavaScript is ready, it can take over the page and everyone is happy. Problem solved, right?

Compare SSR vs. Non-SSR versions

I built a fake shoe site called “Shoeniversal”. You either love or hate the name. I built it with Angular Universal, but the concepts apply to other frameworks that support SSR. You can view the source on GitHub.

I published two versions of the site to Firebase Hosting. One version is server-side rendered and the other is not.

The Non-SSR site is static. The SSR site is static with a dynamic twist. Cloud Functions generates the SSR site and then sends it to a CDN edge. The edge caches the server rendered content as if it were a static site. This way you can expect a similar delivery for each site.
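One way to get this edge-caching behavior is to set a `Cache-Control` header on the function's response. The block below is a minimal sketch, assuming the CDN honors `s-maxage` for shared caches, as Firebase Hosting documents. The helper name and the durations are hypothetical and are not taken from the Shoeniversal source.

```typescript
// Hypothetical helper: builds a Cache-Control value that lets both the
// browser and a shared cache (the CDN edge) store the rendered HTML.
interface CachePolicy {
  browserSeconds: number; // max-age: how long the browser may reuse the page
  cdnSeconds: number;     // s-maxage: how long the CDN edge may reuse the page
}

function buildCacheControl(policy: CachePolicy): string {
  const { browserSeconds, cdnSeconds } = policy;
  if (browserSeconds < 0 || cdnSeconds < 0) {
    throw new RangeError("Cache durations must not be negative");
  }
  const maxAge = Math.floor(browserSeconds);
  const sharedMaxAge = Math.floor(cdnSeconds);
  return `public, max-age=${maxAge}, s-maxage=${sharedMaxAge}`;
}

// Assumed durations, for illustration only.
const cacheHeader = buildCacheControl({ browserSeconds: 300, cdnSeconds: 600 });

// In an Express-style handler the header would be set before sending the HTML:
//   res.set("Cache-Control", cacheHeader);
```

Once the edge holds a copy, later visitors get the server-rendered HTML without waiting for the function to run again, which is what makes the two deployments comparable.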

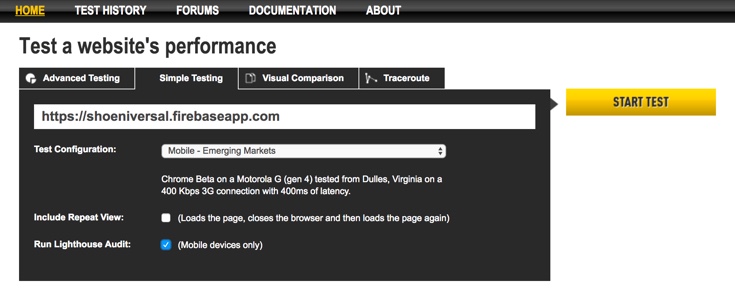

To get a fair test, I used WebPageTest (WPT). WPT is great because it allows you to test on real mobile devices, on slow networks, in different parts of the world… for free.

Disclaimer

I want to point out that this article uses data found only while building this sample. SSR is a fuzzy topic. As a community we’re still trying to figure out proper guidance on implementation. This article is an attempt to pitch in towards this effort. Don’t hesitate to start a conversation if you find any errors or have any comments.

Test on real devices on slow networks

I ran a set of tests on “Emerging Markets” (EM) using a Moto G4. The latency on the EM setting is brutal. Getting to the server and back from the phone costs 400ms. This doesn’t even include download time of the asset.

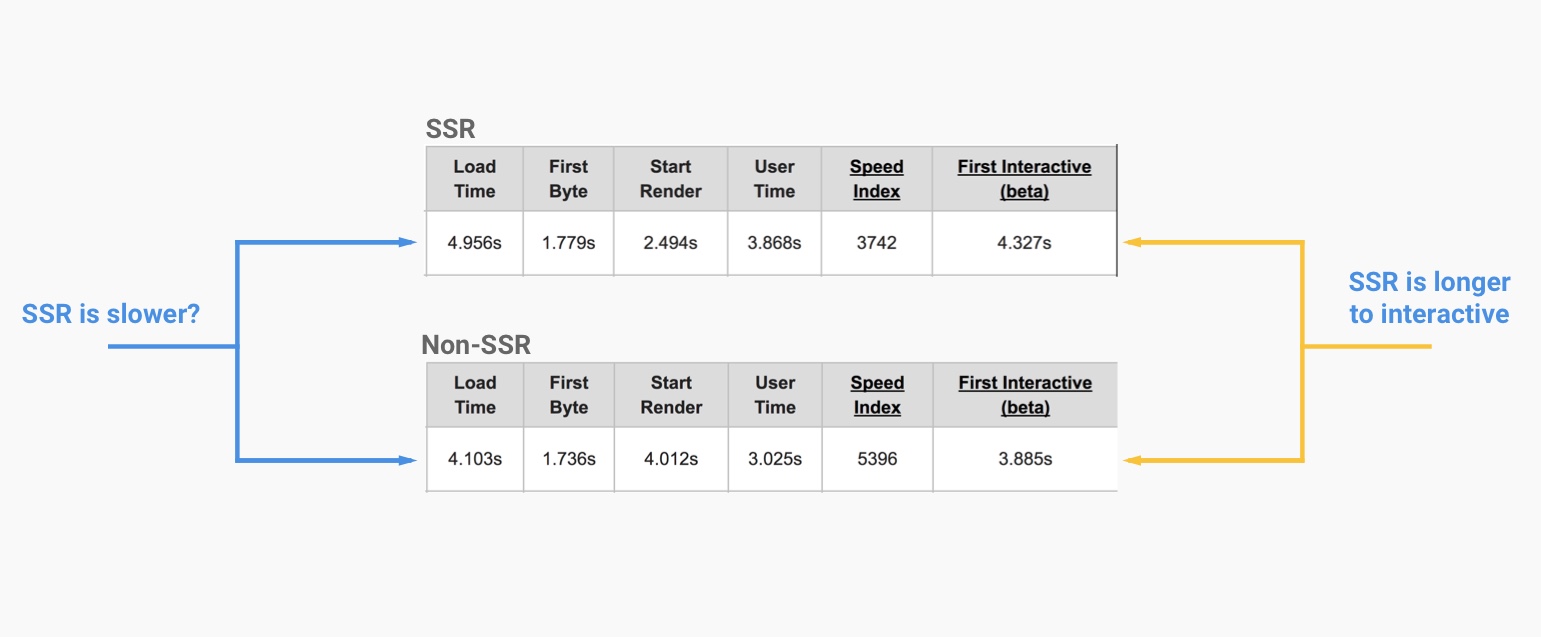

Given these parameters I figured that the SSR site would win. The initial results proved me wrong.

The SSR version was slower

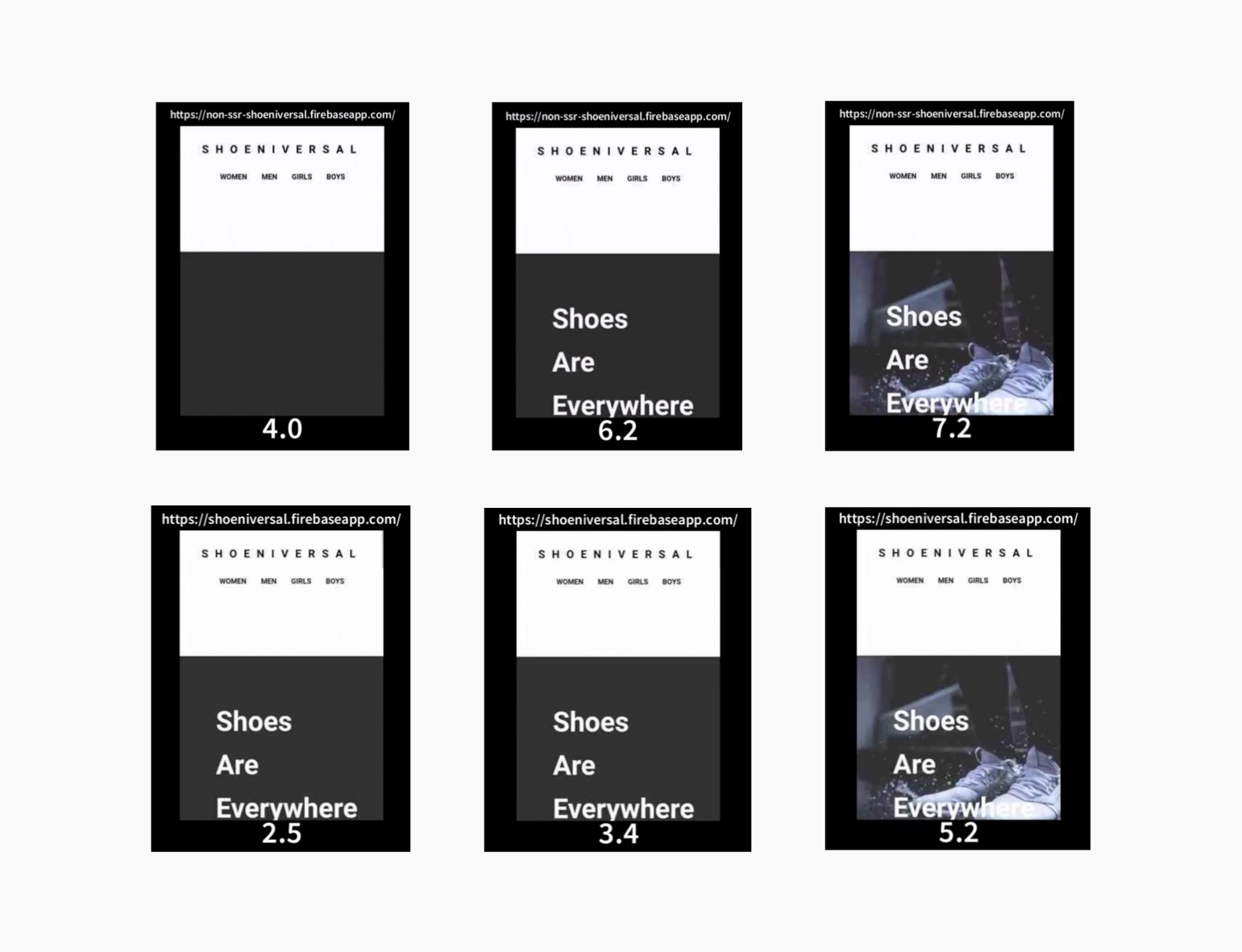

Non-SSR test run. SSR test run.

Test after test, WebPageTest gave me better metrics for the Non-SSR site. The Load Time and Interactive times were better. But something stood out to me: Start Render and Speed Index were faster on the SSR site. This didn’t add up.

How is it possible to obtain a faster paint but result in a slower load time? How can performance start to deteriorate when you begin incorporating best practices? If you really want to see what’s going on, you’ll need to give it the old eye test.

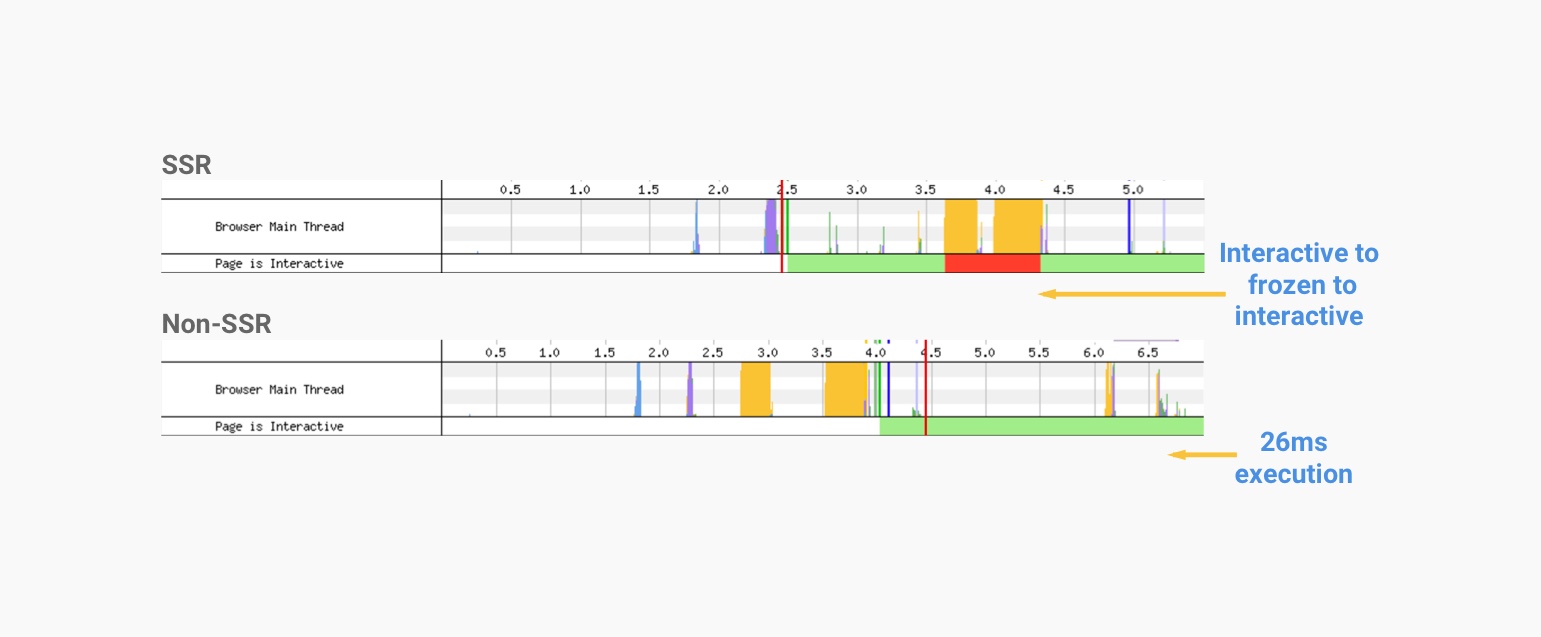

SSR sites become interactive and then block the main thread again

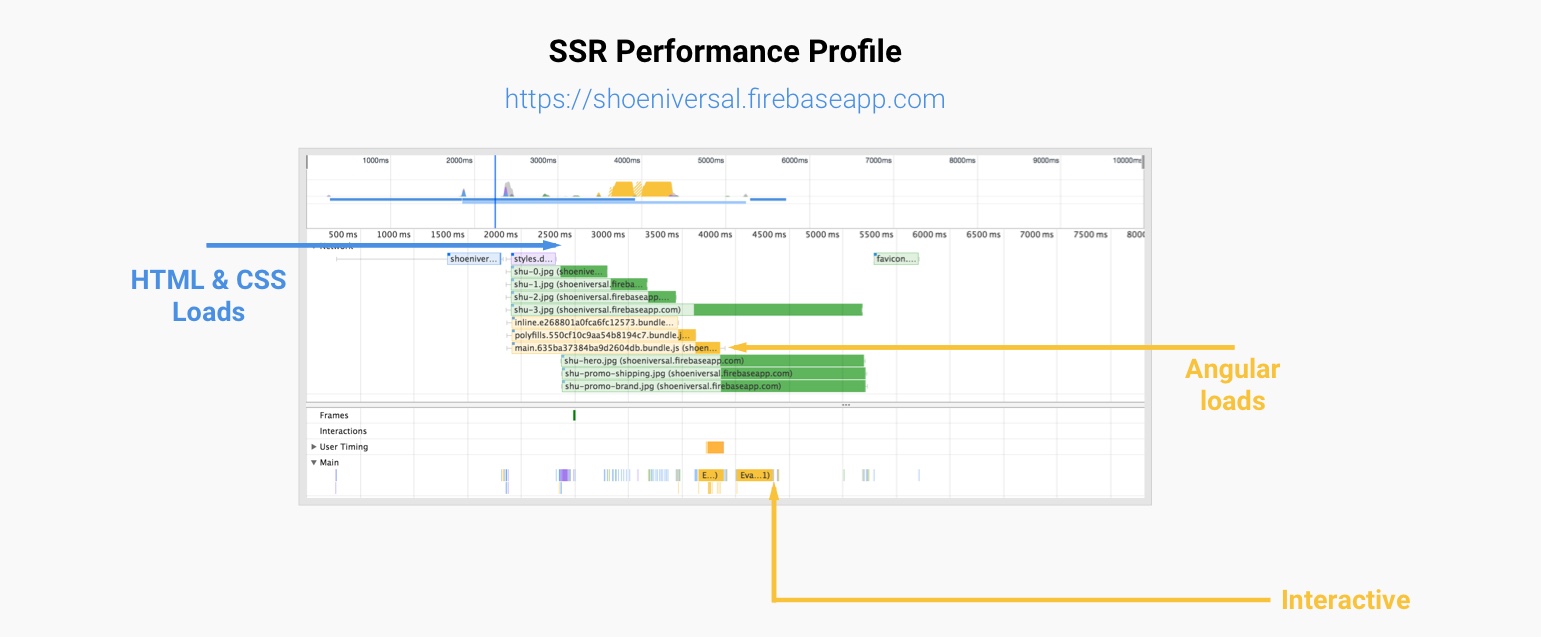

Take a look at the Chrome DevTools timeline for the SSR site.

- ~2400ms: HTML and CSS load. The meaningful content appears.

- ~3800ms: Angular, its polyfills, and the application code load.

- ~4300ms: JavaScript execution finishes. The site is interactive.

- ~4900ms: Load Time. The Document Complete event fires.


We’ve played a trick on the browser. At 2.4 seconds the browser considers the site interactive for just a moment. Then comes the JavaScript.

At about 3.6 seconds JavaScript execution begins and locks up the main thread until 4.3 seconds. This is a bait-and-switch. The browser thinks it’s ready to go, but then the JavaScript comes and freezes the site.

WebPageTest tells us the load time is 4.9 seconds. However, we’re able to see the full app at 2.4 seconds and interact with it at 4.3 seconds. The “Load Time” metric is not that important here.

The timeline and the initial metrics tell a similar story. However, that isn’t the case for the Non-SSR version.

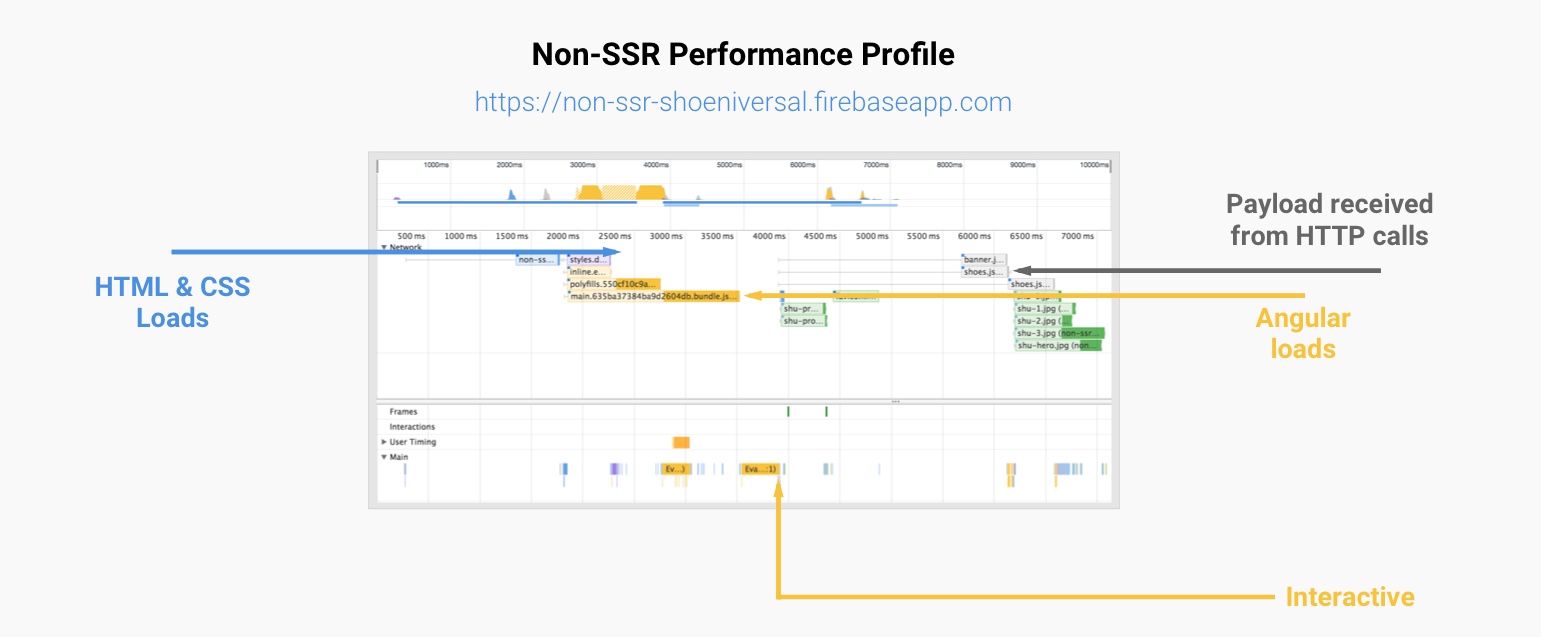

Late HTTP requests are tricky for interactivity metrics

The Non-SSR site may have faster “Load Time” and interactive metrics, but that doesn’t tell the whole story.

- ~2400ms: HTML and CSS load. No meaningful content.

- ~3500ms: Angular, its polyfills, and the application code load.

- ~3800ms: JavaScript execution finishes. Site is reported as interactive.

- ~4100ms: Load time. The Document Complete event fires. Framework sends HTTP requests for data.

- ~6200ms: Payload received from API calls. Angular renders the meaningful content.


This does not look like a 4.1 second “Load Time”. Yes, at four seconds the Document Complete event fired. However, this did not reflect reality. This app has HTTP requests to retrieve the data and those don’t finish until 6.2 seconds. And still, the browser needs to download and render the hero image.

This is a tricky situation. The metrics tell you the Non-SSR site is faster to interactive. However, you can see that the Non-SSR site is unusable until 6.2 seconds, while the SSR version is interactive at 4.3 seconds.

This is a soft example. There’s at least something to paint in the Non-SSR version. Imagine if the API call blocked the entire render. There’s another API call for content below the fold that doesn’t finish until 6.6 seconds. If the site is issuing all these API calls, why didn’t they push back interactivity?

JavaScript execution under 50ms doesn’t affect interactivity

You might consider this an unfair test. The SSR version doesn’t make client-side API calls. However, this is a benefit of SSR. API calls are executed server-side and the application state is transferred to the client. The API calls must be made on the Non-SSR site to retrieve the data. This is what tricks our interactivity metrics.
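The idea of transferring state can be sketched by hand: the server embeds the data it already fetched next to the rendered markup, and the client reads that data instead of calling the API again. This is not Angular Universal's API. The function names, the `Product` shape, and the `app-state` id are all hypothetical, and the sketch parses a string so it can run outside a browser.

```typescript
interface Product {
  id: number;
  name: string;
  price: number;
}

const STATE_ID = "app-state"; // hypothetical id for the embedded state element

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Escape "<" so data containing "</script>" cannot close the state element early.
function serializeState(state: unknown): string {
  return JSON.stringify(state).replace(/</g, "\\u003c");
}

// Server side: render the markup and embed the data that produced it.
function renderPage(products: Product[]): string {
  const items = products
    .map((p) => `<li>${escapeHtml(p.name)}: $${p.price.toFixed(2)}</li>`)
    .join("");
  const state = serializeState(products);
  return (
    `<my-app><ul>${items}</ul></my-app>` +
    `<script id="${STATE_ID}" type="application/json">${state}</script>`
  );
}

// Client side: recover the data without an HTTP request. In a browser you
// would read document.getElementById(STATE_ID).textContent instead.
function readTransferredState(html: string): Product[] | undefined {
  const openTag = `<script id="${STATE_ID}" type="application/json">`;
  const start = html.indexOf(openTag);
  if (start === -1) return undefined;
  const from = start + openTag.length;
  const end = html.indexOf("</script>", from);
  if (end === -1) return undefined;
  return JSON.parse(html.slice(from, end)) as Product[];
}

const sampleProducts: Product[] = [
  { id: 1, name: "Runner <Pro>", price: 89.5 },
  { id: 2, name: "Trail </script> Boot", price: 120 },
];
const page = renderPage(sampleProducts);
const restored = readTransferredState(page);
```

Because the data rides along with the first response, the client skips the extra round trips that the Non-SSR site has to pay for.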

Time to Interactive (TTI) tells us the length of time between navigation and interactivity. The metric is defined by looking at a five-second window. In this window no JavaScript task can take longer than 50ms. If a task over 50ms occurs, the search for a five-second window starts over.

After the API calls finished, it took only 26ms to execute the JavaScript that rendered content to the page. This did not trigger a push back in interactivity. This puts the user in an uncanny valley. The site is interactive but it’s useless until the data returns.
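The quiet-window search described above can be sketched as a small function. This is a simplification that follows only the rule as stated here; real TTI implementations also consider network activity. The function name, the task start times, and the 12-second trace length are assumptions chosen to approximate the two timelines.

```typescript
interface MainThreadTask {
  startMs: number;
  durationMs: number;
}

const LONG_TASK_MS = 50;      // tasks longer than this restart the search
const QUIET_WINDOW_MS = 5000; // required stretch without a long task

// Returns the start of the first quiet window at or after searchStartMs,
// or undefined if the trace ends before a full window has passed.
function estimateTti(
  tasks: MainThreadTask[],
  searchStartMs: number,
  traceEndMs: number
): number | undefined {
  const longTasks = tasks
    .filter((t) => t.durationMs > LONG_TASK_MS)
    .sort((a, b) => a.startMs - b.startMs);

  let candidate = searchStartMs;
  for (const task of longTasks) {
    // A full quiet window fits before this long task begins.
    if (task.startMs - candidate >= QUIET_WINDOW_MS) break;
    // Otherwise the search restarts when the long task ends.
    candidate = Math.max(candidate, task.startMs + task.durationMs);
  }
  return traceEndMs - candidate >= QUIET_WINDOW_MS ? candidate : undefined;
}

// SSR: one long task from about 3600ms to 4300ms.
const ssrTti = estimateTti([{ startMs: 3600, durationMs: 700 }], 2400, 12000);

// Non-SSR: a long task ending near 3800ms, then a 26ms render at 6200ms.
const nonSsrTti = estimateTti(
  [
    { startMs: 3500, durationMs: 300 },
    { startMs: 6200, durationMs: 26 },
  ],
  2400,
  12000
);
```

With these inputs the sketch yields 4300 for the SSR timeline and 3800 for the Non-SSR timeline. The 26ms render at 6.2 seconds is filtered out as a short task, so it never moves the Non-SSR number.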

- It’s also worth pointing out that Angular was able to process this change in 26ms on a low-powered device.

SSR won in this scenario

In this scenario SSR was beneficial. The cost of waiting for API calls to finish on the client was too high. The Non-SSR site became interactive sooner. But an interactive site without meaningful content isn’t really the interactive we’re looking for.

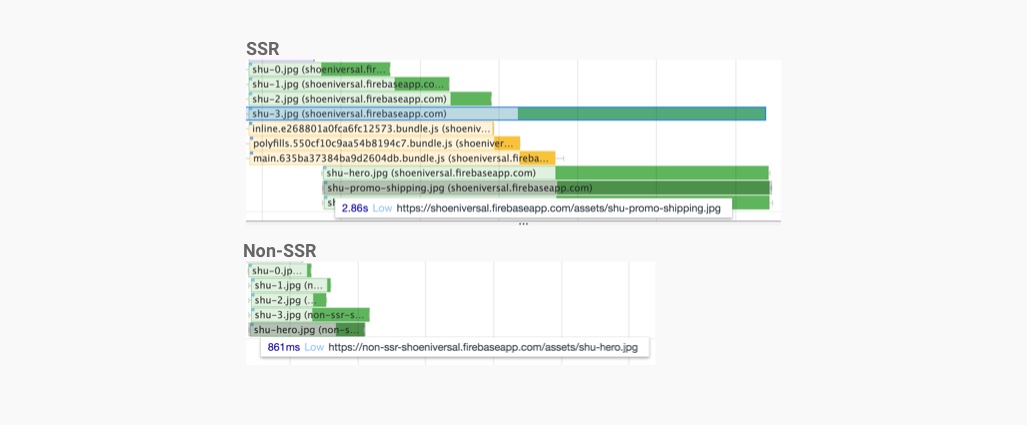

SSR comes at a cost

On high-latency connections, round-trip time is an expensive tax on performance. Server-side rendering delivers the content in a single piece. This keeps the user from waiting on several HTTP calls to see the completed site.

However, it does come at a cost. The user may have a jarring experience on the page. The page will go from interactive to frozen and back to interactive.

The SSR site slowed the processing of the images. The SSR site processed the images while the main thread was busy. This took an extra 2-3 seconds.

Despite this slow processing, the SSR site’s images appeared sooner than the Non-SSR site’s images. This is due to the late discovery of the images in the Non-SSR version.

Takeaway #1: SSR can improve performance when HTTP calls block a meaningful paint

In this scenario SSR was a benefit because it shortened the chain of requests needed to use the site. The most important saving was that the client made no HTTP calls for data. However, if your site is not dependent on client-side HTTP calls for data, then SSR may not benefit you.
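A rough way to reason about the request chain is to count the steps that must happen one after another and multiply by the round-trip time. The sketch below assumes the 400ms round trip from the test setup and ignores download time, connection setup, and requests that run in parallel. The chain contents are a simplification of the timelines, and the function name is hypothetical.

```typescript
// Each entry is one step that must finish before the next one can start.
type RequestChain = string[];

// Lower bound on time spent purely on latency for a sequential chain.
function latencyFloorMs(chain: RequestChain, roundTripMs: number): number {
  return chain.length * roundTripMs;
}

const RTT_MS = 400; // round trip on the "Emerging Markets" setting

const nonSsrChain: RequestChain = [
  "HTML document",
  "framework and app scripts",
  "API data",
];
const ssrChain: RequestChain = ["HTML document"];

const nonSsrFloor = latencyFloorMs(nonSsrChain, RTT_MS); // 1200
const ssrFloor = latencyFloorMs(ssrChain, RTT_MS);       // 400
```

Every dependent step the content waits on adds at least one more round trip, so removing the client-side data call is where SSR earns its keep on a slow network.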

Takeaway #2: Use the eye test

Pre-classified metrics are a good signal for measuring performance. At the end of the day though, they may not properly reflect your site’s performance story. Profile each possibility and give it the eye test.

Takeaway #3: Choose static where possible

The best way to prioritize content is to build a static site. Ask yourself if the content needs JavaScript. Shoeniversal is a “read-only” landing page, so it should be static. I can still use Angular Universal to server-side render this app. Making the site static is as easy as dropping the JavaScript tags from the document.

Without JavaScript the site loads and is interactive in 2 seconds.
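Dropping the script tags could be a small post-processing step on the rendered HTML. The sketch below is hypothetical and uses a regular expression, which is adequate for build output you control but is not a general-purpose HTML parser or sanitizer.

```typescript
// Removes every <script>...</script> element from a rendered HTML string.
function stripScripts(html: string): string {
  return html.replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, "");
}

const rendered =
  "<body><my-app><h1>Shoes</h1></my-app>" +
  '<script src="big-script.js"></script>' +
  '<script src="other-big-script.js"></script></body>';

const staticHtml = stripScripts(rendered);
// staticHtml === "<body><my-app><h1>Shoes</h1></my-app></body>"
```

This only makes sense for pages like this one that need no client-side behavior, since nothing will take over the markup after it loads.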

SSR is not “one-size-fits-all”

Server-side rendering is a great technique for prioritizing content. However, it’s far from having your cake and eating it too. It’s not going to boost everyone’s performance. Use your judgement to choose the technique that best fits your situation.
